Ollama is a framework for running large language models on your local machine. It lets you interact with pre-built models, customize their behavior through prompts, and integrate them into your own applications.

Installation and Getting Started: Getting started with Ollama is straightforward, especially on Linux and WSL2. A single curl command downloads the install script and pipes it to the shell, which sets up everything needed to run models locally.

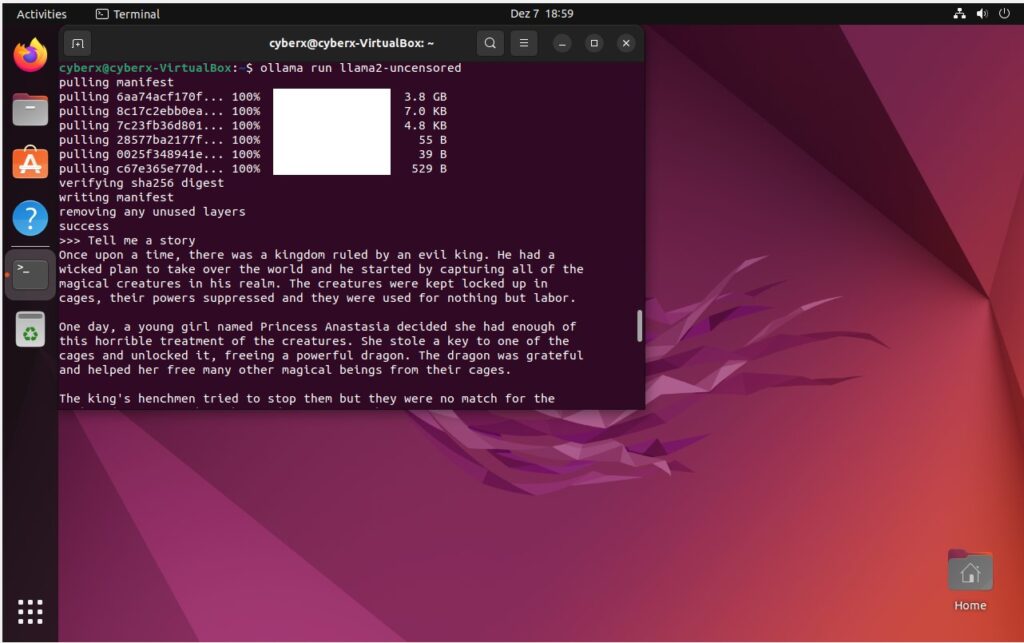

curl https://ollama.ai/install.sh | sh

A Pantheon of Models: Ollama offers a range of pre-built models, each suited to different applications: Neural Chat for conversational AI, Starling for language modeling, and Llama 2 for general-purpose use. Each one is started with `ollama run` followed by the model name.

ollama run neural-chat

ollama run starling-lm

ollama run llama2
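Since `ollama run` downloads a model the first time it is used, it can be convenient to fetch all three ahead of time. The following is a minimal sketch, assuming the `ollama` binary is already on your PATH. The `MODELS` list, the `DRY_RUN` switch, and the `pull_models` function are illustrative names introduced here, not part of Ollama itself. Only `ollama pull` is an actual CLI subcommand.

```shell
#!/bin/sh
# Sketch: pre-fetch the three models named above.
# MODELS, DRY_RUN and pull_models are hypothetical helper names.

MODELS="neural-chat starling-lm llama2"

# Default to a dry run, so the script only prints what it would do.
DRY_RUN="${DRY_RUN:-1}"

pull_models() {
  for model in $MODELS; do
    if [ "$DRY_RUN" = "1" ]; then
      # Print the command instead of running it.
      echo "ollama pull $model"
    else
      # `ollama pull` downloads a model without opening a chat session.
      ollama pull "$model" || return 1
    fi
  done
}

pull_models
```

Run the script as-is to preview the commands, then set `DRY_RUN=0` to perform the downloads. Keeping the dry run as the default avoids starting several multi-gigabyte downloads by accident.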

Hardware Specifications:

- Processor: Intel i5 4670

- RAM: 16GB DDR3

- Virtualization: VirtualBox (4 cores, 8 GB RAM)